We knew it would happen - and it just did. The world's smartest computer just got smarter…

Many have been raving about ChatGPT's admittedly awesome capabilities since it was released late last year. And what they've been raving about - whether or not they realize it - is GPT-3.5, the large language model (LLM) that powers ChatGPT. GPT stands for Generative Pre-trained Transformer, a family of LLMs built by OpenAI and trained on massive swaths of text data to generate natural, human-like text in response to queries.

And it's the GPT model under the hood that makes ChatGPT so smart.

OpenAI has just released GPT-4, the latest iteration of the large language model behind ChatGPT. And while it bests its predecessor pretty much across the board, there are four key areas in which this updated LLM is far more advanced: creativity, extended context, safety, and visual input. Let's take a look at what that actually means.

Creativity

"GPT-4 can solve difficult problems with greater accuracy, thanks to its broader general knowledge and problem-solving abilities." - OpenAI.

OpenAI claims GPT-4 is more creative and collaborative than its predecessors. Given the sheer volume of user interactions ChatGPT has handled on GPT-3.5, it isn't surprising that GPT-4 would be that much more creative, and that's without even counting the formal training provided by OpenAI. Practice (and larger LLMs) make perfect.

So GPT-4 should be even better at generating, editing, and iterating on creative content with users, be it music, poetry, prose, or code. And part of what makes this possible is the next point.

Extended Context

GPT-4 significantly raises the text limit for user queries, from roughly 3,000 words to a whopping 25,000 words. That helps it be more creative and resourceful, and it lets users be more creative as well, since the queries they craft can be that much more complex. Larger, more complex queries, coupled with GPT-4's broader knowledge, allow it to be more creative, iterative, and collaborative.

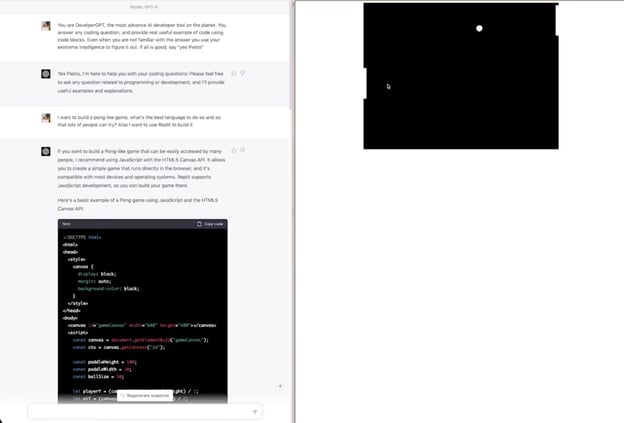

GPT-4 wrote a Pong-style video game in under 60 seconds - that's pretty creative and resourceful in anyone's book, wouldn't you say?

Safety

OpenAI also adjusted the tech's creativity where ethics and facts are concerned; in other words, it toned it down.

"We spent six months making GPT-4 safer and more aligned. GPT-4 is 82% less likely to respond to requests for disallowed content and 40% more likely to produce factual responses than GPT-3.5 on our internal evaluations." - OpenAI

This was achieved by incorporating more human feedback into GPT-4's training and development, a big chunk of which came from ChatGPT users submitting feedback and simply using the product. OpenAI also worked with 50 experts from a range of domains, including AI safety and security, in developing GPT-4.

Even so, OpenAI makes it clear that GPT-4, like its older sibling GPT-3.5, is still capable of "hallucinations." The company noted that "GPT-4 still has many known limitations that we are working to address, such as social biases, hallucinations, and adversarial prompts. We encourage and facilitate transparency, user education, and wider AI literacy as society adopts these models. We also aim to expand the avenues of input people have in shaping our models."

Visual Input

This is likely the most significant advancement GPT-4 makes: visual data processing. Unlike any of its predecessors, GPT-4 can understand images, so users can interact with GPT-4 using visual inputs, like pictures.

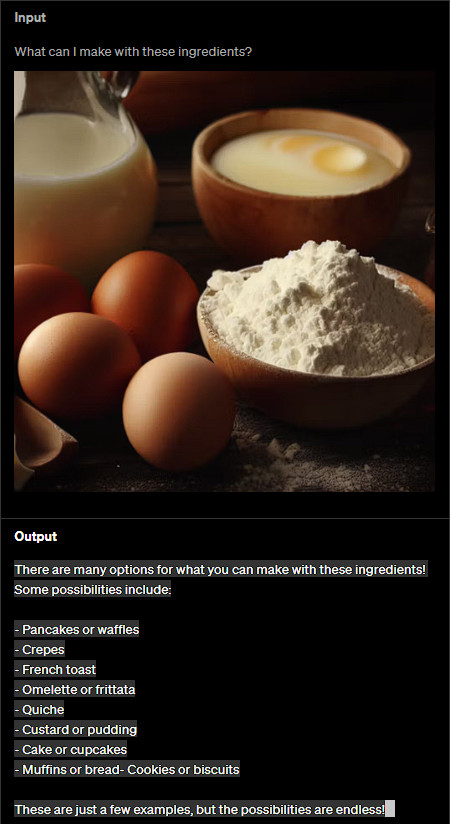

When I say GPT-4 understands images, I mean that it can recognize what is photographed (or otherwise represented) in an image. OpenAI shared a screenshot of GPT-4's response when it was fed an image of ingredients and asked what could be made with them.

The response was spot-on.

Brands Already On-board

While OpenAI launched GPT-4 just a few days ago, it turns out that some major brands worldwide are already using it, not least Microsoft. GPT-4 has been powering Microsoft's "new Bing" chatbot for some time now.

And it isn't just Microsoft or Big Tech. Other early adopters of GPT-4 include Duolingo, the online language-learning service, which incorporated GPT-4 into its "Duolingo Max" subscription; Khan Academy, the online learning platform, which is building GPT-4 into "every student's customized tutor"; and Be My Eyes, an assistance app for the visually impaired.

That's not bad for a product that was released less than a week ago…

Wrap Up

That, in a nutshell, is GPT-4 and its upgraded capabilities. And you should stay tuned for more incremental updates like this one: OpenAI CEO Sam Altman stated in an interview with StrictlyVC that "a quick rollout of several small changes is better than a shocking advancement that provides little opportunity for the world to adapt to the changes."

And he's probably right.

GPT-4 is available now on ChatGPT Plus (the paid version of ChatGPT) and as an API for developers. You can try GPT-4 with a ChatGPT Plus subscription or join the API waitlist on OpenAI's website.

About Modev

Modev believes that markets are made and thus focuses on bringing together the right ingredients to accelerate market growth. Modev has been instrumental in the growth of mobile applications, cloud, and generative AI, and is exploring new markets such as climate tech. Founded in 2008 on the simple belief that human connection is vital in the era of digital transformation, Modev makes markets by bringing together high-profile key decision-makers, partners, and influencers. Today, Modev produces market-leading events such as VOICE of AI, ESG Tech Summit, and the soon-to-be-released Developers.AI series of hands-on training events. Modev staff, better known as "Modevators," include community-building and transformation experts worldwide.

To learn more about Modev, and the breadth of events offered live and virtually, visit Modev's website and join Modev on LinkedIn, Twitter, Instagram, Facebook, and YouTube.